After a semester of exploring new media, I’ve decided to reflect on the digital experiences I’ve had and choose which lessons are worth taking with me as I continue to venture into the ever-changing world of technology. I’ve compiled a list of the four biggest lessons from my digital adventures, lessons that anyone should follow when conquering new media.

1. Create your own new media. As we’ve discovered, we’re all submerged in a world that is dominated by technology (whether we like it or not), and we depend on it in order to function in society. Technology structures the way we communicate, from cell phones to Facebook, and the way we educate ourselves, from Google searches to social bookmarking. But the biggest lesson I’ve learned from our use of technology is that we have to learn how it works, and the best way to do so is by creating our own new media. In the introduction to his book Program or Be Programmed: Ten Commands for a Digital Age, Douglas Rushkoff stresses that in order to maintain control in a world dominated by digital technologies, one must learn how they work to be able to manage his or her everyday life. Learn HTML, start a blog, or create a Twitter account. Don’t you want control over your reality?

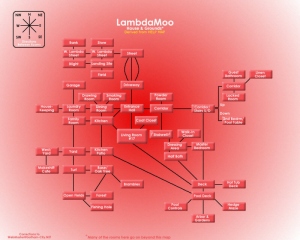

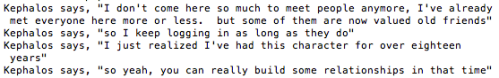

2. Embrace technology. The benefits of using many of the new media technologies that I’ve written about throughout the semester are seemingly endless. Specifically, social bookmarking sites like Pinterest, Google Reader, and Delicious make gathering information easier and faster than ever before and supplement traditional media forms, such as newspapers and encyclopedias. Take advantage of our culture of shared thinking by connecting with others on LambdaMOO or by sharing on Twitter your “random, fleeting observations,” which Julian Dibbell describes in “Future of Social Media: Is a Tweet the New Size of a Thought.” Without a doubt, I’ve learned that there’s no harm in trying these technologies, which aim to comfort us and make our lives easier. You’re only doing yourself a disservice if you don’t.

3. New media isn’t perfect. Danah Boyd writes in her article “Incantations for Muggles: The Role of Ubiquitous Web 2.0 Technologies for Everyday Life,” “As you build technologies that allow the magic of everyday people to manifest, I ask you to consider the good, the bad, and the ugly.” While you should take advantage of the benefits of new media, don’t forget that technology is not perfect, as I’m sure many of you have experienced, to your frustration. Your iPhone could break at any minute, your Wi-Fi could go down without warning, and your Facebook account could be hacked. While new technologies usually generate utopian hopes for their users, as Fred Turner describes in “How Digital Technology Found Utopian Ideology,” it’s important to be aware that technology isn’t actually foolproof. By the same token, it’s important to question what you see when using these new technologies. In an age where everyone is a publisher online, with sites such as Wikipedia open to anyone’s edits, some information is bound to be wrong. We can’t assume that what we’re reading is perfectly correct, or else we may all become a culture of misinformed people.

4. Look out for the future. I know it may seem impossible to predict what’s coming next in new media, but I think trying to do so is an important part of deciding how to interact with the technology around us. As I wrote in my last post, I believe that technology is headed toward a reduction of information, with imagery used in place of text, as seen in technologies such as Pinterest and Instagram. It’s important to prepare for new technologies, for example by educating ourselves about imagery as a form of communication, especially because that new media may end up being a part of our everyday lives.

Now go forth and dive into our media-filled world.